Centralized logging is a cornerstone of any professional IT or DevOps setup. Azure Monitor, with its Log Analytics Workspaces (LAW), provides a powerful platform not only for collecting native Azure logs but also for aggregating custom application and automation logs.

In this post, I’ll show you how to send custom log entries directly from a script (here: PowerShell) into a Log Analytics Workspace using the HTTP Data Collector API, and give an overview of the most common ways data reaches LAW.


❔ Why Custom Logs?

Many people know Azure Monitor primarily through:

- Activity Logs – administrative and operational events

- Metrics & Resource Logs – collected via Diagnostic Settings

- VM Logs – collected via the Azure Monitor Agent (AMA)

- Custom Logs – often via DCR (Data Collection Rules)

…but LAW is much more flexible. You can push logs from virtually any source, including your own applications or scripts. This is where the HTTP Data Collector API shines, allowing you to send JSON payloads directly to your workspace.

⚙️ Common Ways Logs Reach Log Analytics

| Source | Method | Description |
|---|---|---|
| Azure Resources | Diagnostic Settings | Sends resource logs & metrics |
| Virtual Machines | Azure Monitor Agent (AMA) | Host and performance data |
| Custom Logs | DCR / Custom Tables | Read local log files or structured JSON |
| External / Apps | HTTP Data Collector API | Push JSON records from scripts or applications |

For developers and DevOps engineers, the HTTP Data Collector API is particularly useful because any application that can make HTTP requests can write log data to LAW.

🤖 HTTP Data Collector API Overview

The API is a simple REST endpoint for your workspace:

- Authentication: Shared Key signature (an HMAC-SHA256 hash computed with the Primary or Secondary workspace key)

- Payload: JSON array of objects

- Headers: Log-Type (the custom table name), x-ms-date (request timestamp), Authorization (the signature), and optionally time-generated-field

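- Use case: custom application logs, automation scripts, CI/CD feedback, or any scenario where native Azure logs aren’t available

Note: Microsoft has deprecated the HTTP Data Collector API and announced that it will stop working on September 14, 2026. For long-term solutions, consider its successor, the Logs Ingestion API, which is based on Data Collection Rules.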
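The signature is the only non-obvious part of the protocol. As a minimal sketch (Python 3 standard library only; the function name build_signature is hypothetical), this mirrors the steps the PowerShell function performs: join method, body length, content type, the x-ms-date header, and the resource path with newlines, HMAC-SHA256 that string with the Base64-decoded workspace key, and Base64-encode the result.

```python
import base64
import hashlib
import hmac
from email.utils import formatdate


def build_signature(workspace_id, shared_key_b64, date_rfc1123, content_length,
                    method="POST", content_type="application/json",
                    resource="/api/logs"):
    """Return the Authorization header value for one Data Collector API request."""
    # Canonical string: the fields joined by newlines, in exactly this order
    string_to_hash = "\n".join([
        method,
        str(content_length),
        content_type,
        "x-ms-date:" + date_rfc1123,
        resource,
    ])
    # The workspace key is Base64-encoded; decode it to get the raw HMAC key
    key_bytes = base64.b64decode(shared_key_b64)
    digest = hmac.new(key_bytes, string_to_hash.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('ascii')}"


if __name__ == "__main__":
    date = formatdate(usegmt=True)  # RFC 1123, e.g. "Mon, 01 Jan 2024 12:00:00 GMT"
    placeholder_key = base64.b64encode(b"not-a-real-key").decode("ascii")
    print(build_signature("your-workspace-id", placeholder_key, date, content_length=42))
```

The exact same date string must also be sent in the x-ms-date header, and the length must be the byte length of the UTF-8 body; otherwise the service computes a different hash and rejects the request.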

⚒️ Setting Up Your Log Analytics Workspace

Before you can send any custom log data, you’ll need to have an existing Log Analytics Workspace and obtain two key values:

- The Workspace ID

- The Primary Key (Shared Key)

Here’s how to get them:

Step 1: Create a Log Analytics Workspace

- In the Azure Portal, search for “Log Analytics Workspaces” and click Create.

- Choose:
  - Subscription – your active subscription
  - Resource Group – existing or new one
  - Name – e.g., my-law-demo
  - Region – select your preferred region

- Click Review + Create, then Create.

Once deployed, open the workspace.

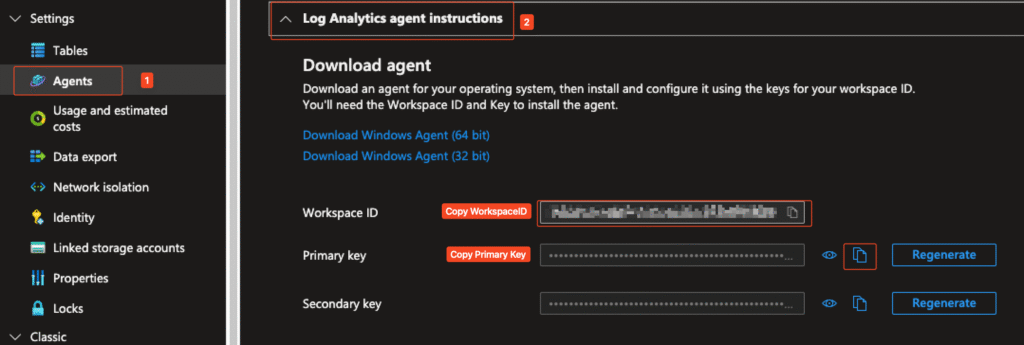

Step 2: Retrieve Workspace ID and Primary Key

- Inside your workspace, go to the Agents section on the left-hand menu.

- Under Log Analytics agent instructions, you’ll find:
  - Workspace ID
  - Primary Key
  - Secondary Key

- Click Copy Workspace ID and Copy Primary Key to retrieve the values. These are used in the PowerShell script as $CustomerId and $SharedKey.

💡 Security Tip: Never store this key in plain text in scripts or repositories.

In production, store it securely in Azure Key Vault and retrieve it programmatically when needed.
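Whatever the storage, the script itself should only read the key at runtime. A minimal Python sketch, assuming a pipeline or host step (for example, one that reads Key Vault) exposes the values as the hypothetical environment variables LAW_WORKSPACE_ID and LAW_SHARED_KEY:

```python
import base64
import os
from dataclasses import dataclass, field


@dataclass(frozen=True)
class WorkspaceConfig:
    workspace_id: str
    # repr=False keeps the key out of printed objects and log lines
    shared_key: str = field(repr=False)


def load_workspace_config(environ=os.environ):
    """Read the workspace ID and key from (hypothetical) environment variables."""
    workspace_id = environ.get("LAW_WORKSPACE_ID", "").strip()
    shared_key = environ.get("LAW_SHARED_KEY", "").strip()
    if not workspace_id or not shared_key:
        raise RuntimeError("LAW_WORKSPACE_ID and LAW_SHARED_KEY must both be set")
    try:
        # The key is Base64-decoded when signing; fail early if it is malformed
        base64.b64decode(shared_key, validate=True)
    except ValueError as exc:  # binascii.Error is a subclass of ValueError
        raise RuntimeError("LAW_SHARED_KEY is not valid Base64") from exc
    return WorkspaceConfig(workspace_id, shared_key)
```

Calling load_workspace_config() once at startup and passing the result around means a missing or mistyped key fails immediately with a clear message instead of surfacing later as a rejected signature.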

📋 PowerShell Example: Sending a Custom Log Entry

Below is a complete PowerShell function that sends a single log entry to a Log Analytics workspace. I’ve included comments to explain each step.

Full code: GitHub/Add-CustomMonitorLog.ps1

function Send-LogAnalyticsEntry {
    param(
        [Parameter(Mandatory=$true)]
        [string]$CustomerId,

        [Parameter(Mandatory=$true)]
        [string]$SharedKey,

        [Parameter(Mandatory=$true)]
        [object]$LogEntry,

        [Parameter(Mandatory=$true)]
        [string]$LogType,

        [string]$TimeStampField = ""
    )

    try {
        # Convert the log entry to a JSON array.
        # -InputObject keeps the array wrapper (piping would unroll a single entry),
        # -Depth prevents nested properties from being truncated at the default depth of 2.
        $json = ConvertTo-Json -InputObject @($LogEntry) -Depth 10 -Compress
        $body = [System.Text.Encoding]::UTF8.GetBytes($json)

        # Prepare API call
        $method = "POST"
        $contentType = "application/json"
        $resource = "/api/logs"
        $rfc1123date = [DateTime]::UtcNow.ToString("r")
        $contentLength = $body.Length

        # Build authentication signature (HMAC-SHA256 with the Base64-decoded shared key)
        $xHeaders = "x-ms-date:" + $rfc1123date
        $stringToHash = $method + "`n" +
                        $contentLength + "`n" +
                        $contentType + "`n" +
                        $xHeaders + "`n" +
                        $resource
        $bytesToHash = [Text.Encoding]::UTF8.GetBytes($stringToHash)
        $keyBytes = [Convert]::FromBase64String($SharedKey)
        $sha256 = New-Object System.Security.Cryptography.HMACSHA256
        $sha256.Key = $keyBytes
        $calculatedHash = $sha256.ComputeHash($bytesToHash)
        $encodedHash = [Convert]::ToBase64String($calculatedHash)
        $signature = "SharedKey ${CustomerId}:${encodedHash}"

        # Build request
        $uri = "https://${CustomerId}.ods.opinsights.azure.com${resource}?api-version=2016-04-01"
        $headers = @{
            "Authorization"        = $signature
            "Log-Type"             = $LogType
            "x-ms-date"            = $rfc1123date
            "time-generated-field" = $TimeStampField
        }

        # Send log entry
        $response = Invoke-WebRequest -Uri $uri `
            -Method $method `
            -ContentType $contentType `
            -Headers $headers `
            -Body $body `
            -UseBasicParsing

        if ($response.StatusCode -eq 200) {
            Write-Verbose "Log entry sent successfully"
            return $true
        }
        else {
            Write-Warning "Unexpected status code: $($response.StatusCode)"
            return $false
        }
    }
    catch {
        Write-Error "Error sending log entry: $_"
        return $false
    }
}

Example Usage:

# Demo configuration (replace with your workspace ID and key for real use)

$workspaceId = "your-workspace-id"

$workspaceKey = "your-primary-key"

# Create a log entry

$logEntry = @{

Timestamp = Get-Date -Format "yyyy-MM-ddTHH:mm:ssZ"

Level = "Information"

Message = "Application started successfully"

}

# Send it

Send-LogAnalyticsEntry -CustomerId $workspaceId `

-SharedKey $workspaceKey `

-LogEntry $logEntry `

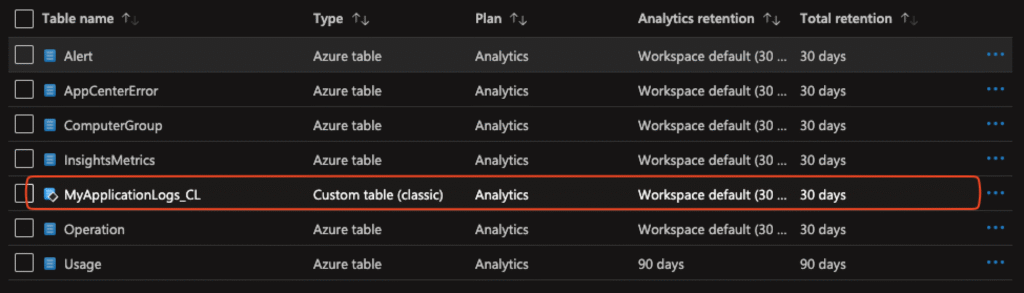

-LogType "MyApplicationLogs"After sending the first log entry the table get created automatically:
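For comparison, the same request can be issued from Python with only the standard library. This is a sketch mirroring Send-LogAnalyticsEntry, not an official client; build_request and send_log_entries are hypothetical names. Building and sending are split so the signed request can be inspected without network access.

```python
import base64
import hashlib
import hmac
import json
import urllib.request
from datetime import datetime, timezone
from email.utils import formatdate


def build_request(workspace_id, shared_key_b64, log_type, records,
                  time_generated_field=""):
    """Build (but do not send) a signed HTTP Data Collector API request."""
    if isinstance(records, dict):
        records = [records]  # like @($LogEntry): always send a JSON array
    body = json.dumps(list(records), separators=(",", ":")).encode("utf-8")
    date = formatdate(usegmt=True)  # RFC 1123 date, like ToString("r")

    # Same canonical string and HMAC-SHA256 signature as the PowerShell version
    string_to_hash = f"POST\n{len(body)}\napplication/json\nx-ms-date:{date}\n/api/logs"
    digest = hmac.new(base64.b64decode(shared_key_b64),
                      string_to_hash.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")

    headers = {
        "Content-Type": "application/json",
        "Authorization": f"SharedKey {workspace_id}:{signature}",
        "Log-Type": log_type,  # becomes the <log_type>_CL table
        "x-ms-date": date,
    }
    if time_generated_field:
        # Only send the header when a timestamp field was actually requested
        headers["time-generated-field"] = time_generated_field

    url = (f"https://{workspace_id}.ods.opinsights.azure.com"
           "/api/logs?api-version=2016-04-01")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send_log_entries(workspace_id, shared_key_b64, log_type, records, **kwargs):
    """Send the records; True on HTTP 200 (urlopen raises HTTPError on non-2xx)."""
    request = build_request(workspace_id, shared_key_b64, log_type, records, **kwargs)
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status == 200


if __name__ == "__main__":
    entry = {
        # ISO 8601 timestamp generated in UTC, so the trailing "Z" is accurate
        "Timestamp": datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "Level": "Information",
        "Message": "Application started successfully",
    }
    # Placeholder credentials: the request is only built and inspected here, not sent
    request = build_request("your-workspace-id", base64.b64encode(b"placeholder").decode(),
                            "MyApplicationLogs", entry, time_generated_field="Timestamp")
    print(request.full_url)
    print(request.data.decode("utf-8"))
```

Passing a list of dictionaries sends several records in one POST, which is cheaper than one request per entry when a script produces many events.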

Note: This example uses a placeholder Primary Key. In production environments, you should never hardcode keys as I said. Use Azure Key Vault or environment variables for secure storage, e.g.:

$kvSecret = Get-AzKeyVaultSecret -VaultName "MyKV" -Name "LAWPrimaryKey"

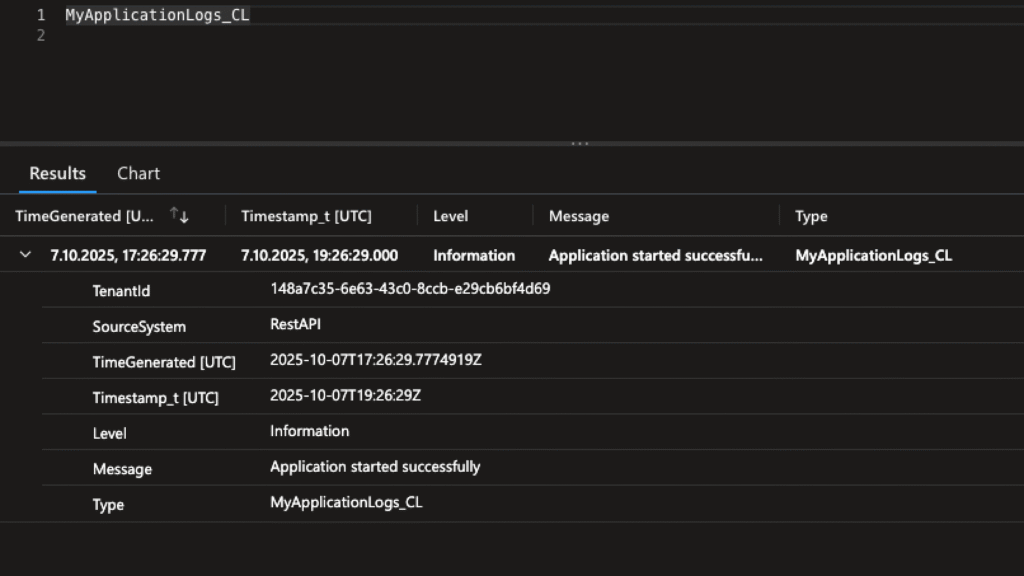

$SharedKey = $kvSecret.SecretValueText✅ Validating in Log Analytics

Once the log is sent, you can query it in your workspace using Kusto Query Language (KQL):

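MyApplicationLogs_CL
| sort by TimeGenerated desc
| take 5

This displays the five most recent entries from your custom table, which Log Analytics names after your Log-Type with a _CL suffix. The sort matters: take on its own returns an arbitrary set of rows, not the latest ones. Newly sent entries can take a few minutes to become queryable, especially right after the table has been created.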
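The column names in that table are not exactly the property names you sent: besides the _CL table suffix, the Data Collector API appends a type suffix to each custom field (_s for strings, _d for numbers, _b for booleans, _t for date/time, _g for GUIDs). The following Python sketch predicts those names for a flat record so you know what to query. It is a simplified approximation: the regular expressions are assumptions and the service may recognise more formats, so confirm against the actual table schema.

```python
import re
from typing import List, Tuple

# Simplified patterns (assumption): the service may recognise more formats than these
ISO_DATETIME = re.compile(
    r"^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(\.\d+)?(Z|[+-]\d{2}:\d{2})?$")
GUID = re.compile(
    r"^[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}$")


def expected_columns(log_type: str, record: dict) -> Tuple[str, List[str]]:
    """Predict the table name and custom column names for one flat record."""
    columns = []
    for name, value in record.items():
        if isinstance(value, bool):  # test bool first: bool is a subclass of int
            suffix = "_b"
        elif isinstance(value, (int, float)):
            suffix = "_d"  # numbers are stored as double
        elif isinstance(value, str):
            if ISO_DATETIME.match(value):
                suffix = "_t"
            elif GUID.match(value):
                suffix = "_g"
            else:
                suffix = "_s"
        else:
            raise TypeError(f"{name!r}: only flat scalar values are handled here")
        columns.append(name + suffix)
    return log_type + "_CL", columns


if __name__ == "__main__":
    print(expected_columns("MyApplicationLogs", {
        "Timestamp": "2024-01-01T12:00:00Z",
        "Level": "Information",
        "Message": "Application started successfully",
    }))
```

For the example entry from the PowerShell usage, the sketch predicts Timestamp_t, Level_s and Message_s, so a query could project TimeGenerated, Level_s and Message_s.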

💡 Why This Approach Is Useful

- Universal: Push logs from any source, not just Azure services

- Centralized: All logs in one place, allowing alerts, dashboards, and integration with Sentinel or Power BI

- Flexible: Works with scripts, CI/CD pipelines, applications, or IoT devices

- Programmable: PowerShell (or any language that can make HTTP requests) can automate log generation

🧠 Conclusion

Azure Log Analytics is far more than a default Azure logging platform. With the HTTP Data Collector API and a small wrapper in PowerShell (or another programming language of your choice), you can turn it into a universal logging hub for your organization or projects.

Whether you’re collecting application telemetry, automation events, or custom metrics, this approach gives you full control and flexibility, while still benefiting from Azure’s monitoring ecosystem.